PersonaLive!

Expressive Portrait Image Animation for Live Streaming

A real-time and streamable diffusion framework capable of generating infinite-length portrait animations on a single 12GB GPU.

Generate portrait animations in real time, ideal for live streaming applications.

Stream-ready architecture for seamless integration with broadcasting platforms.

Generate animations of unlimited duration without running out of memory.

Run on consumer-grade GPUs with as little as 12GB of VRAM.
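To confirm that a machine meets the 12GB VRAM figure above, a small preflight check can help. This is a hypothetical sketch, not part of PersonaLive: it assumes PyTorch with CUDA support is installed, and the names `meets_vram_requirement` and `query_gpu_vram_bytes` are illustrative.

```python
"""Hypothetical preflight sketch: does the first CUDA GPU meet a 12GB VRAM figure?"""

REQUIRED_VRAM_GB = 12  # figure quoted in the feature list above


def meets_vram_requirement(total_bytes: int, required_gb: float = REQUIRED_VRAM_GB) -> bool:
    """Return True if `total_bytes` of device memory meets `required_gb`.

    The reported total can sit at or slightly below a card's nominal capacity,
    so this sketch compares against decimal gigabytes (10**9 bytes) to avoid
    rejecting a nominal 12GB card.
    """
    return total_bytes >= required_gb * 10**9


def query_gpu_vram_bytes(device_index: int = 0):
    """Return total memory of CUDA device `device_index` in bytes, or None.

    Assumption: PyTorch is installed. Returns None if it is not, or if no
    CUDA device is visible.
    """
    try:
        import torch  # assumption: installed alongside the project's requirements
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(device_index).total_memory
```

For example, `meets_vram_requirement(query_gpu_vram_bytes() or 0)` returns False when no GPU is found, and otherwise compares the card's reported memory against the 12GB figure.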

See PersonaLive in action with various portrait styles

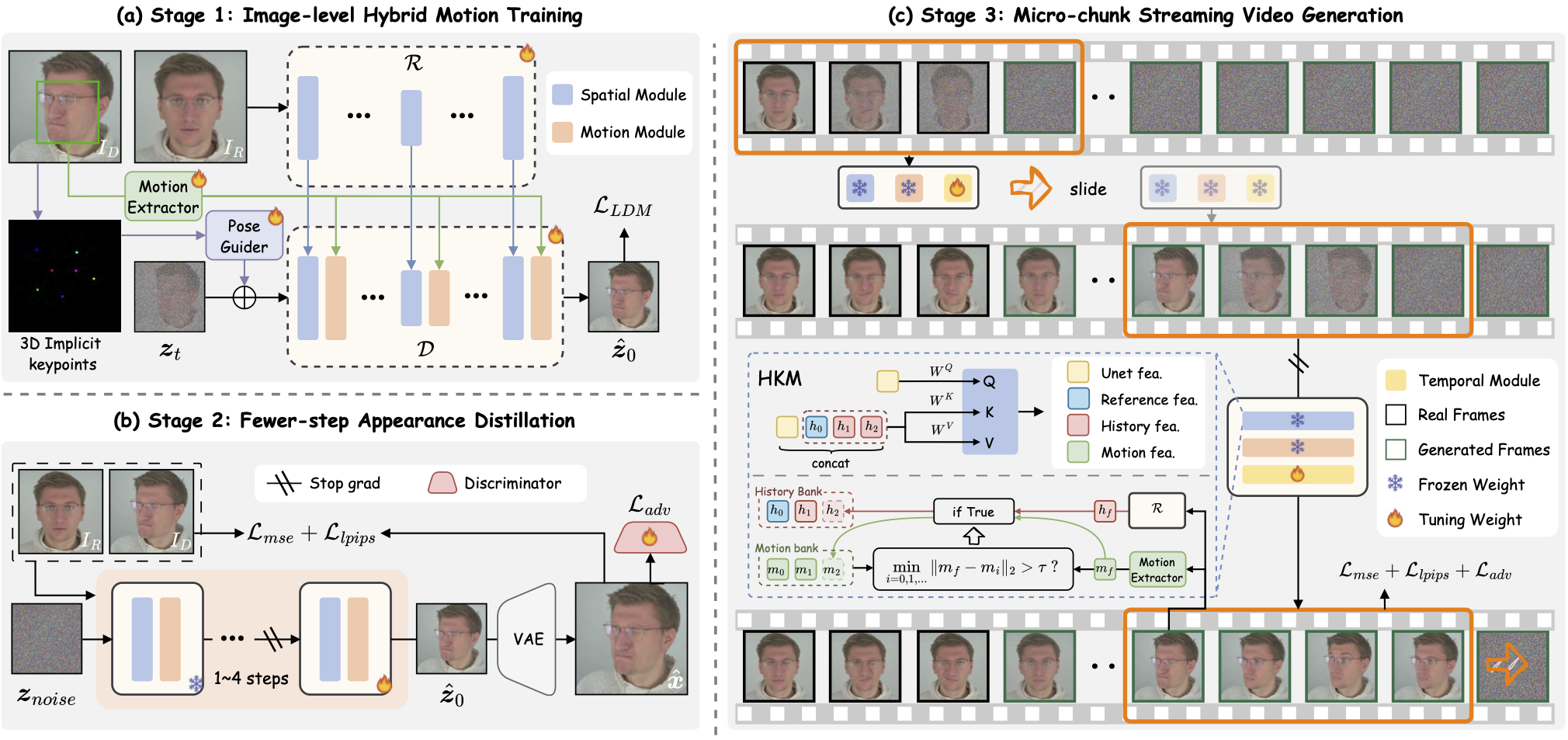

How PersonaLive achieves real-time portrait animation

PersonaLive leverages a novel streaming diffusion architecture that enables real-time portrait animation generation. The framework combines:

Everything you need for live portrait animation

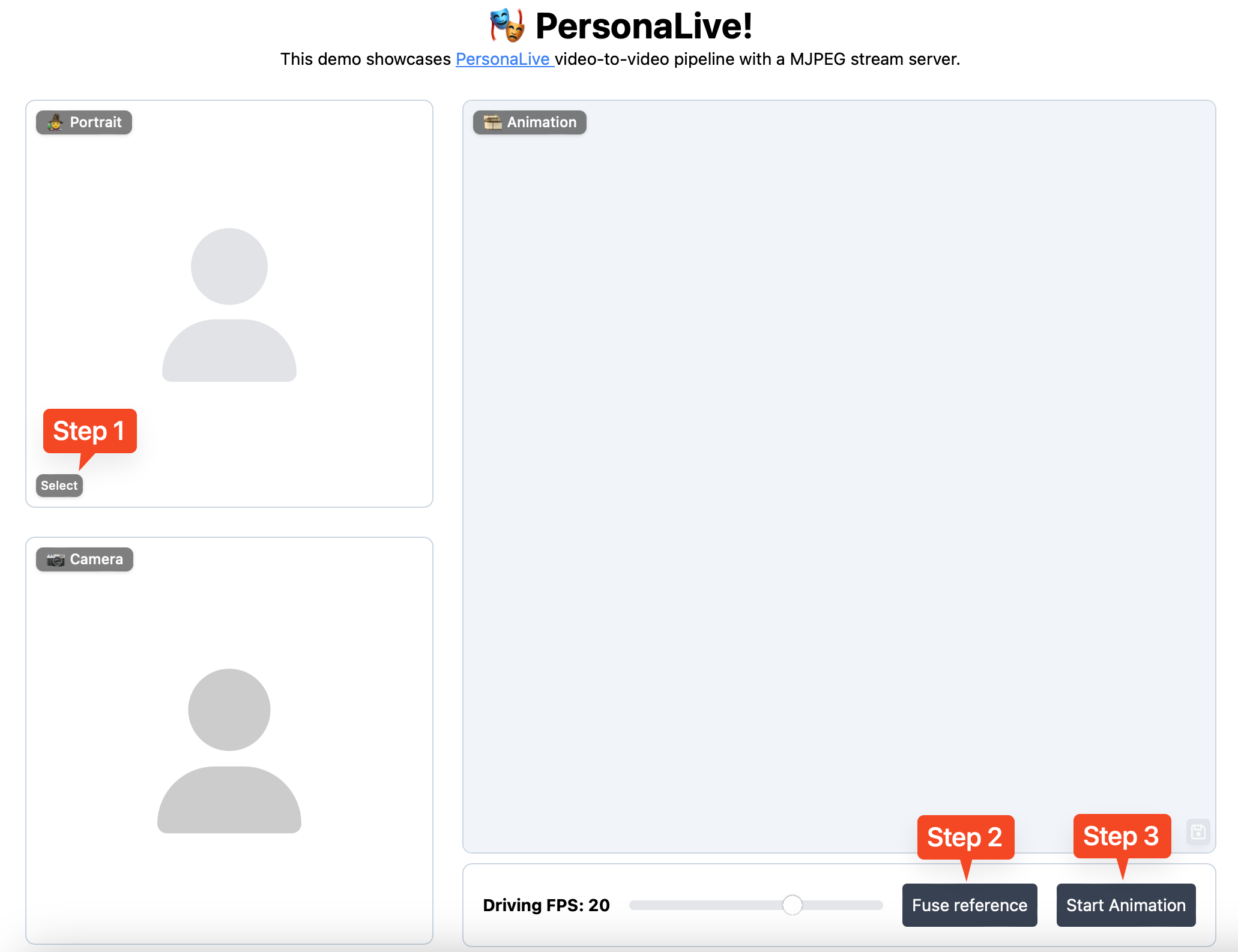

Easy-to-use web interface for real-time streaming. Upload an image, fuse the reference, and start animating!

Full ComfyUI integration for advanced users who want flexible workflow customization.

Optional TensorRT conversion for 2x faster inference.

Generate long videos offline using a streaming strategy on 12GB of VRAM.

Maintains the original image's style and identity throughout the animation.

Simple Python API for integration into your own applications.
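This page does not describe how PersonaLive's streaming strategy works internally. As a rough illustration of why streaming keeps memory flat for arbitrarily long videos, here is a generic sketch, not the project's method: driving frames are consumed in fixed-size chunks, and only a short window of recent outputs is carried forward as context. `stream_animation` and the `generate_chunk` callback (a stand-in for the model) are hypothetical.

```python
from collections import deque
from itertools import count, islice
from typing import Callable, Iterable, Iterator, List


def stream_animation(
    driving_frames: Iterable,
    generate_chunk: Callable[[List, List], List],
    chunk_size: int = 4,
    context_size: int = 2,
) -> Iterator:
    """Yield generated frames chunk by chunk from a possibly endless input stream.

    At any moment this helper holds one chunk of driving frames (`chunk_size`)
    plus the last `context_size` generated frames, so its memory use does not
    grow with the length of the video.
    """
    if chunk_size < 1 or context_size < 0:
        raise ValueError("chunk_size must be >= 1 and context_size >= 0")
    frames = iter(driving_frames)
    context = deque(maxlen=context_size)  # older outputs are evicted automatically
    while True:
        chunk = list(islice(frames, chunk_size))
        if not chunk:
            return  # finite input exhausted; a live feed never reaches this
        outputs = generate_chunk(list(context), chunk)
        context.extend(outputs)
        yield from outputs


if __name__ == "__main__":
    def fake_generate(context, chunk):
        # Hypothetical stand-in for the model: label each driving frame.
        return [f"out{f}" for f in chunk]

    # `count()` is an endless stream; islice takes only the first 5 outputs.
    print(list(islice(stream_animation(count(), fake_generate, chunk_size=2), 5)))
    # ['out0', 'out1', 'out2', 'out3', 'out4']
```

Because the function is a generator, frames are produced only as the consumer asks for them, which is what lets the same loop serve both an offline long video and an open-ended live feed.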

Get up and running in minutes

```bash
# Clone the repository
git clone https://github.com/GVCLab/PersonaLive
cd PersonaLive

# Create conda environment
conda create -n personalive python=3.10
conda activate personalive

# Install dependencies
pip install -r requirements_base.txt

# Download model weights automatically
python tools/download_weights.py

# Offline inference
python inference_offline.py

# Online streaming (WebUI)
python inference_online.py --acceleration xformers
```

Then open http://localhost:7860 in your browser.

Upload Image ➡️ Fuse Reference ➡️ Start Animation ➡️ Enjoy! 🎉
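When scripting the online mode, a small standard-library helper can wait until the WebUI at http://localhost:7860 answers before you open the browser. This is a hypothetical convenience sketch, not a PersonaLive utility; it only checks that some HTTP server responds at that address, and the names `webui_is_up` and `wait_for_webui` are illustrative.

```python
import time
import urllib.error
import urllib.request

WEBUI_URL = "http://localhost:7860"  # address from the quick start above

# The WebUI runs locally, so bypass any HTTP(S)_PROXY environment settings.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def webui_is_up(url: str = WEBUI_URL, timeout: float = 2.0) -> bool:
    """Return True if any HTTP server answers at `url` within `timeout` seconds."""
    try:
        with _OPENER.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status, but it is running.
        return True
    except OSError:
        # URLError (e.g. connection refused) and timeouts are OSError subclasses.
        return False


def wait_for_webui(url: str = WEBUI_URL, attempts: int = 30, delay: float = 1.0) -> bool:
    """Poll `url` up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if webui_is_up(url):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

With `python inference_online.py --acceleration xformers` running in another terminal, `wait_for_webui()` returns True once the page responds, or False after roughly 30 seconds.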

```bibtex
@article{li2025personalive,
  title={PersonaLive! Expressive Portrait Image Animation for Live Streaming},
  author={Li, Zhiyuan and Pun, Chi-Man and Fang, Chen and Wang, Jue and Cun, Xiaodong},
  journal={arXiv preprint arXiv:2512.11253},
  year={2025}
}
```